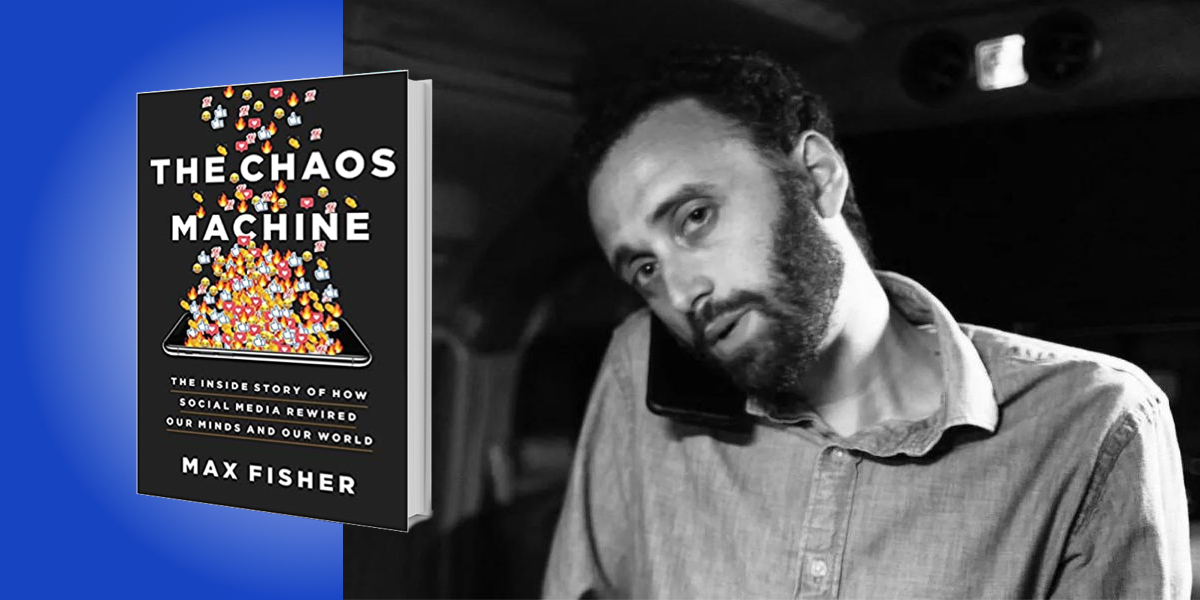

Max Fisher is an international reporter and columnist at the New York Times, where he covers global trends and major world events. He contributed to a series about social media that was a finalist for the Pulitzer Prize in 2019.

Below, Max shares 5 key insights from his new book, The Chaos Machine: The Inside Story of How Social Media Rewired Our Minds and Our World. Listen to the audio version—read by Max himself—in the Next Big Idea App.

1. Social platforms are lying to you.

When you open social media, you think that you’re seeing the views, sentiments, and political opinions of your community. You think it’s the world being reflected through the platforms, but what you’re seeing is a lie that the platform tells in order to manipulate you.

You’re actually seeing the choices made by the platform’s artificial intelligence systems, which have combed through vast amounts of online content (more than you could take in yourself) and selected a tiny proportion of those posts to show you. Those systems then order and sequence the posts in a way they have learned will be maximally effective at keeping you engaged for as long as possible.

The social platforms designed these systems—for which they employ thousands of engineers, including some of the world’s top names in artificial intelligence—to deliberately turn your greatest cognitive and emotional weaknesses against you. Those systems draw (and this used to be openly discussed in Silicon Valley, before they learned to hide it) from the largest pools of private user data ever assembled to learn precisely what will best manipulate you.

They also draw from the darkest corners of human psychology: everything from the science of addiction behind casinos, whose influence you can see in the slot-machine-like colors and sounds of social platforms, to the science of how we identify truth or separate right from wrong. Vastly sophisticated AIs are at work every second of every day deconstructing our deepest impulses and frailties so that they can turn them against us.

2. Social platforms are training you.

Social platforms keep us scrolling and tapping for as long as possible so that they can show us more of the ads that make them billions of dollars every year. In 2014, according to Census Bureau data, for the first time the average American spent more time socializing on social media than socializing in person, and the gap has widened every year since. Social media is now the predominant way we consume news and information, learn about our world, and relate to one another.

Social media platforms learned to train you into certain behaviors and feelings so that you will post in ways that make both you and the users you interact with likelier to spend more time on the platform. In training you, the platforms change who you are.

For example, in one experiment researchers tested participants on their baseline propensity for outrage before telling them to post on Twitter (it was a fake Twitter, so the researchers could control the experience). Half of the subjects were told to send a tweet expressing outrage, and the researchers then showed those subjects that their tweets had gotten a bunch of likes and shares. This mimicked the real thing: many other studies have shown that social media sites artificially amplify any post with outrage in it. The platforms know that outrage performs well, so they blast outraged posts to lots of people, resulting in particularly high engagement with those posts. The researchers did this a few times. Soon, all the research subjects, even the ones who had been identified as averse to outrage, started internalizing it.

They thought they were getting all this positive feedback from their community, all this affirmation and attention for expressing outrage, when really it was the platform tricking them. Sure enough, those users wanted to post more outraged tweets, with each post more outraged than the last. But what blew my mind is that the research subjects became more prone to outrage when they were offline, away from social media. That faked sense of social reward had been delivered so powerfully that it changed their underlying nature as human beings.

This training process is happening to all of us, every single day. And it’s more than just outrage being drilled into us. Understanding that was a big first step for me in documenting the consequences of social media for our world.

3. The consequences reach into every facet of life.

The algorithms that choose what we see, what goes viral, and how content is presented are essentially putting a car battery to our deepest and sometimes darkest instincts. They feed our inner beast to keep us scrolling and posting, which further exacerbates the platforms’ effects on our communities. There’s one example I think of in particular.

Seven villages in different parts of Indonesia, with no connection to one another, rose up simultaneously in spontaneous mob violence. In each instance, the target was some innocent man from outside the village who happened to be traveling through. It turned out that a single viral rumor had appeared on Facebook. That rumor had initially come from a small account with no real audience, but the platform had identified it as freakishly engaging, with the kind of precision that only a machine trained on billions of online interactions could achieve, because it hit on exactly the kind of conspiracy and identity panic that triggers collective outrage and thus drives engagement.

The system had pushed it out to so many users, so aggressively and so quickly, and in a way that hijacked those users’ instincts for collective action, that those seven villages rose up into violence at once. This was in early 2018, and a few months later I happened to go to Facebook’s headquarters in Silicon Valley for a different story and met some of the people who lead responses to exactly these sorts of crises. I told them about the incident in Indonesia, and they kind of shrugged and said okay. They didn’t ask any follow-ups, didn’t ask the names of the villages or who my original source was.

The specific rumor that caused the chaos over there might sound familiar to you: it claimed that shadowy elites were in league with minorities to kidnap local children and harvest their organs. The reason it might sound familiar is that a few months later, a version of that same rumor was ferociously promoted by Facebook and YouTube to millions of Americans, under the name QAnon. Within a few months, an almost identical rumor was pushed by the platforms in a half-dozen other countries, such as Mexico and Germany, as if the system were converging on this bizarre, macabre rumor as some sort of skeleton key for boosting engagement.

This demonstrates the power that these systems can wield, which is ubiquitous but can feel so shocking and seismic when it’s revealed through crisis. But this story also underscores that there’s no one at the wheel. This is a machine with no human driver, no overseer, more or less free to do with us and our societies and our politics whatever it wants.

4. Turning it off is not a good enough answer.

What power does an individual have when literally the largest companies by market capitalization in human history have utterly conquered our individual attention and the very modes by which we engage with one another and understand reality itself? I’d like to tell you that the answer is to just turn it off. Generally, that is good advice, given the research showing that even a couple of weeks away from social media substantially improves well-being.

In one experiment, researchers had test subjects switch off Facebook (just that one platform) for four weeks. Compared to a control group of subjects who stayed on social media, the ones who shut it off became substantially happier and less anxious, because they had disconnected from systems that are designed to hook users through negative emotions like outrage and identity conflict. In fact, the boost in life satisfaction was equivalent to 25 to 40 percent of the effect of going to therapy. Just from deleting an app. The same study found that the people who had shut off social media immediately became dramatically less affected by partisan polarization. The drop in polarization among those people was equivalent to about half of the total rise in polarization in America from 1996 to 2018, which is to say that it was like rolling back two decades of political toxicity and division.

Still, turning it off is not a good enough answer. As nice as it sounds, most of us don’t have the luxury of just turning it off. And even if by some miracle you can completely shut off from those digital worlds, you are still affected by the consequences of those platforms. They’re still poisoning the world around you. Telling people that the solution is deleting some apps is a little like saying, “Hey, there’s a giant factory polluting the water you drink, so just buy bottled water!”

5. Understanding is the first line of defense.

There are two things that you can do, beyond trying to spend less time on social media. First, understand that social media hides its influence in what it shows you. You’re being saturated in, and trained to follow, the platforms’ preferences, the platforms’ desired politics, and the platforms’ desired emotional sentiments, but they’re smuggled in through what looks like your peers, friends, and community.

Second, understand how your own mind works. Social media’s power comes from co-opting your mental machinery, but the truth of how your mind works is not necessarily what you would think it is. How we learn, how we determine right and wrong, and how we differentiate what is convincing or persuasive from what is not are all questions that have received substantial study in the last ten years. Social platforms have become incredibly sophisticated in understanding and exploiting basic elements of our nature, but if you can understand your own nature and the flaws that come with being human, then you’ll be able to identify and gird yourself against technological influence.
