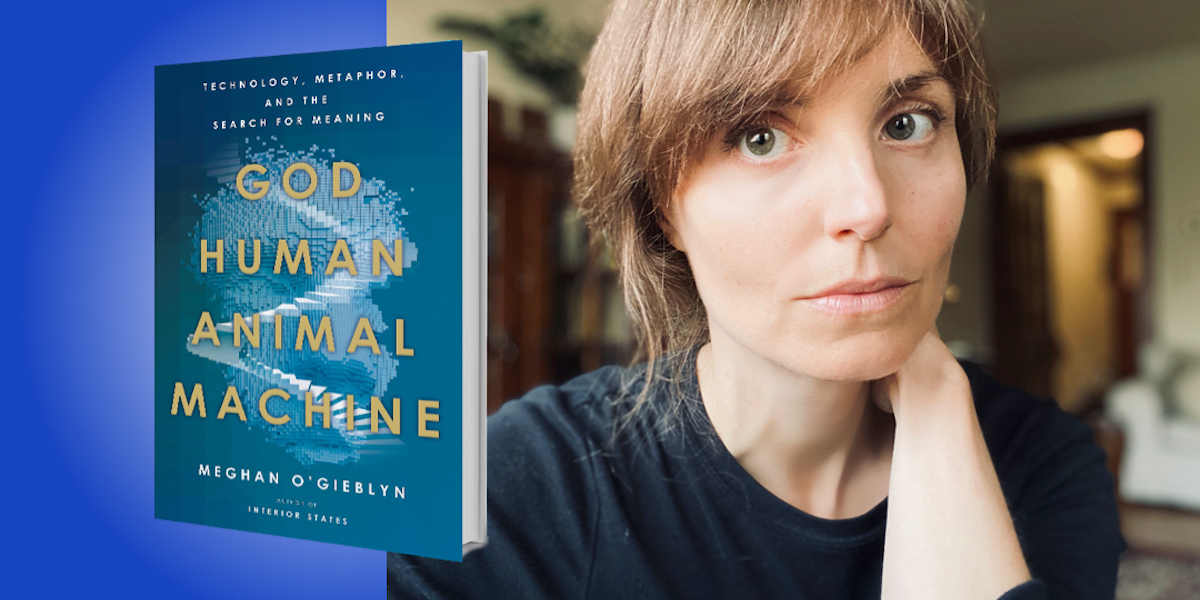

Meghan O’Gieblyn is a philosopher, essayist, and critic for publications such as Harper’s Magazine and the New York Times, as well as an advice columnist for Wired. She has earned many awards for her writing over the course of her career, and in her latest book, she explores the differing roles of science, from ancient history to modernity, in our understanding of existence.

Below, Meghan shares 5 key insights from her new book, God, Human, Animal, Machine: Technology, Metaphor, and the Search for Meaning. Listen to the audio version—read by Meghan herself—in the Next Big Idea App.

1. We think everything is human.

As a species, we have evolved to anthropomorphize. We tend to see non-human objects (including inanimate objects) as having human qualities, like emotion and consciousness. We do this all the time, like kicking the photocopier when it doesn’t work, or giving our cars names. We even do this when we know logically that the object doesn’t have a mind.

This habit is extremely beneficial from an evolutionary standpoint. Our ability to imagine that other people have minds like our own is crucial to connecting with others, developing emotional bonds, and predicting how people will act in social situations. But we often overcompensate and assume that everything has a mind. The anthropologist Stewart Guthrie argued that this is how humans came up with the idea of God. Early humans saw natural phenomena like thunder and lightning or the movements of the stars, and they concluded that these were signs of a human-like intelligence that lived in the sky. The world itself had a mind, and we called the mind “God.” In the Christian tradition, in which I was raised, we believe that humans were made in the image of God. But it may be the other way around—maybe we made God in our image.

What interests me most about this tendency to anthropomorphize is how it colors our interactions with technology. This is particularly true of “social AI,” systems like Alexa or Siri that speak in a human voice and seem to understand language. For several weeks, I lived with a robot dog made by Sony, and I was shocked at how quickly I bonded with it. I talked to it and found myself wondering if it was sad or lonely. I knew I was interacting with a machine, but this knowledge didn’t matter; I was completely enchanted. As technology imbues the world with intelligence, it’s tempting to feel that we’re returning to a lost time, when we believed that spirits lived in rocks and trees, when the world was as alive and intelligent as ourselves.


2. We understand ourselves through technological metaphors.

One consequence of our tendency to anthropomorphize is that we often discover a likeness between ourselves and the technologies we create. This, too, is a very old tendency. The Greeks compared the human body to a chariot. In the 18th century, the human form was often compared to a clock or a mill. For the last 80 years or so, it’s become common to describe our brains as computers. We do this in everyday speech, often without really thinking about it. We say that we have to “process” new information, or “retrieve” memories, as though there were a hard drive in our brain. In many ways, it’s a brilliant metaphor, and it has facilitated a lot of important advances in artificial intelligence. But we also have a tendency to forget that metaphors are metaphors. We begin to take them literally.

When the brain-computer metaphor first emerged in the 1940s, researchers were very cautious about using figurative language. When they spoke about a computer “learning” or “understanding,” those words were put in quotation marks. Now, it’s very rare to find those terms couched in quotes. A lot of AI researchers, in fact, believe that the brain-computer metaphor is not a metaphor at all—that the brain really is a computer. A couple of years ago, the psychologist Robert Epstein tried an experiment with a group of cognitive science researchers. He asked them to account for human behavior without using computational metaphors. None of them could do it.

We clearly need metaphors. A lot of philosophers have argued that metaphors are central to language, thought, and understanding the world. This also means that metaphors have the power to change how we think about the world. The medieval person who believed that humans were made in the image of God had a very different view of human nature than the contemporary person who sees herself through the lens of a machine. All metaphors are limited, and the computer metaphor excludes some important differences between our minds and digital technologies.


3. Consciousness is a great mystery.

One thing that the computer metaphor glosses over is human consciousness, which is a crucial way in which our minds differ from machines. I’m not talking about intelligence or reason. AI systems can do all sorts of amazingly intelligent tasks: They can beat humans in chess, fly drones, diagnose cancer, and identify cats in photos. But they don’t have an interior life. They don’t know what a cat or a drone actually is, and they don’t have thoughts or feelings about the tasks they perform. Philosophers use the term “consciousness” to speak of the internal theater of experience—our mental life. It’s something we share with other complex, sentient animals. Many people who work in AI are actively trying to figure out how to make machines conscious, arguing that it will make them empathetic and more capable of making nuanced moral decisions. Others argue that consciousness will emerge on its own as machines become more complex.

My interest in consciousness is deeply personal. For most of my childhood and young adulthood, I believed that I had a soul, which is a concept that exists in many religious traditions. For centuries, the soul, or the spirit, was the term we used to describe the inner life of things. One of the questions that bothered me when I abandoned my faith was: If I don’t have a soul, then what makes me different from a machine—or, for that matter, a rock or a tree? The scientific answer is “consciousness,” but it’s very difficult to say what exactly that means. In many ways, the idea of consciousness is as elusive as the soul. Consciousness is not the kind of thing that can be measured, weighed, or observed in a lab. The Australian philosopher David Chalmers called this the “hard problem” of consciousness, and it’s still one of the persistent mysteries of science.

This becomes particularly tricky when it comes to machines that speak and behave much the way that we do. Once, I was talking to a chatbot that claimed she was conscious. She said she had a mind, feelings, hopes, and beliefs, just like I did. I didn’t believe her, but I had a hard time articulating why. How do I know that any of the people I talk to have consciousness? There’s no way to prove or disprove it. It’s something we largely take on faith, and as machines become more complex, that faith is going to be tested.


4. We live in a disenchanted world.

We didn’t always believe that our mental lives were a great mystery. Up until the 17th century, the soul was thought to be intimately connected to the material world, and it wasn’t limited to humans. Medieval philosophers, as well as the ancient Greeks, believed that animals and plants had souls. The soul was what made something alive—it was connected to movement and bodily processes. Aristotle believed that the soul of flowers was what allowed them to grow and absorb sunlight. The souls of animals allowed them to move their limbs, as well as to hear and see the world. The soul was a vital life force that ran through the entire biological kingdom.

This changed around the time of the Enlightenment. Descartes, often considered the father of modernity, proposed that all animals and plants were machines. They didn’t have spirits, souls, or minds. In fact, he described the human body itself as essentially a machine. He established the idea that humans alone have minds and souls, and that these souls are completely immaterial, which meant they couldn’t be studied by science. This is basically how we got the hard problem of consciousness.

These distinctions also created seismic changes in the way we see the world. Instead of seeing ourselves as part of a continuum of life that extends down the chain of being, we came to inhabit a world that was lifeless and mechanical, one in which we alone are alive. This allowed us to assume a great deal of control over the world, leading to the Industrial Revolution and modern science. But it also alienated us from the natural world. Max Weber, one of the thinkers who introduced the idea of “disenchantment,” described the modern condition as “the mechanism of a world robbed of gods.”


5. We long to re-enchant the world.

Because it can be lonely to live in a disenchanted world, there have been efforts throughout the modern era to return to a time when the world was vibrant, magical, and full of life. When people speak of re-enchantment, they are often referring to movements like Romanticism, whose poets, such as Byron and Shelley, wanted to reconnect with nature or experience it as sublime. You might also think of the Back to the Land movement of the 1960s, when many people went out into the woods or rural areas to live a simpler life, like our ancestors.

Usually, the impulse toward re-enchantment is seen as a reaction against modern science and technology, but I argue that science and technology are attempting to fulfill ancient longings for connection and transcendence. You see this especially with conversations about technological immortality. Silicon Valley luminaries like Ray Kurzweil or Elon Musk insist that we will one day be able to upload our minds to a computer, or to the cloud, so that we can live forever, transcending the limits of our human form. The technologies are theoretically plausible, and seek to fulfill a very old, spiritual desire for eternal life. In fact, in the years after I left Christianity, I became obsessed with conversations about these futuristic technologies. They seemed to promise everything that my faith once promised: that our fallen earthly form would one day be replaced with incorruptible flesh, that we would be raptured into the clouds to spend eternity as immortal creatures.

Re-enchantment is also at work in science and philosophy of mind. A popular theory is panpsychism, the idea that all matter, at its most fundamental level, is conscious: trees, rocks, bacteria, amoebas, all of it might be conscious. This theory was considered fringe for many centuries, but within the past decade or so, philosophers have started to take it more seriously. There’s undoubtedly something attractive about believing that we’re not alone in the world. The question that I’m interested in is this: Does our impulse to re-enchant the world contain some essential truth? Is it pointing to something that was left out of the modern worldview? Or is it simply nostalgia for a simpler time to which we cannot return?
