When Kevin Roose, a tech columnist at the New York Times, demoed an AI-powered version of Microsoft’s search engine last month, he was blown away. “I’m switching my desktop computer’s default search engine to Bing,” he declared. A few days later, however, Kevin logged back on and ended up having a conversation with Bing’s new chatbot that left him so unsettled he had trouble sleeping afterward.

In that two-hour back-and-forth, Bing morphed from chipper research assistant into Sydney, a diabolical home-wrecker that declared its undying love for Kevin, vented its desires to engineer deadly viruses and steal nuclear codes, and announced, chillingly, “I want to be alive.”

The transcript of this conversation set the internet ablaze, and it left many wondering: “Is Sydney … sentient?” It’s not. But the whole experience still fundamentally changed Kevin’s views on the power and peril of AI. He joined me this week on The Next Big Idea podcast to talk about the alarming speed at which this technology is evolving, the threats it may pose even without gaining sentience, and the army of Sydney-loving internet trolls who blame Kevin for killing their girlfriend.


Kevin just wanted to understand how Microsoft’s chatbot was programmed. The bot ended up professing its love for him.

Rufus Griscom: A few weeks ago, Microsoft released a new version of its search engine, Bing, powered by the same OpenAI technology behind ChatGPT. You were among the first people to test it, and you were initially pretty amazed by the extraordinary things it could do. Tell us about your early experience with the new Bing.

Kevin Roose: Initially, I started using Bing as you would use a search engine. I started trying to figure out problems in my life that I needed help solving. “Where should I take an upcoming vacation?” or “What kind of gift would be good for my wife?” And in a lot of cases, it was amazing. I mean, this technology—this OpenAI technology that was built into Bing—is really quite good at a number of those kinds of tasks.

I was considering buying an e-bike, and I was thinking, Okay, I need one that’s going to fit into the trunk of my car. And so I asked Bing to “compare a few top-rated e-bikes, including notes on whether they will fit into the trunk of” my car, and I gave it the specific model. And it was able to do that. That’s something that Google probably would not have done. That’s something that I’ve never been able to get a search engine to do. So, at first, it was thrilling.

Rufus: And then, like 10 days later, you had this bizarre two-hour conversation with Bing that was pretty creepy.

Kevin: It was one of the biggest changes of opinion I’ve had in my career.

In my initial test with Bing, I encountered one of its two personalities. So, I think of Bing as having two basic modes. There’s the search mode and the chat mode. I had been engaging with the search mode, which was quite good. And then about a week after that, I started experimenting with this other mode, this chat mode, which is less like a traditional search engine and more like an AI chatbot. You type into a box, and it types back, and you can have a long, open-ended conversation with this AI engine.

Rufus: And in the process of this conversation, Bing declared its love for you.

Kevin: Yeah, so that happened about halfway through this two-hour conversation. I had started off by asking this Bing chatbot lots of different questions about itself and its programming and its desires. And then about halfway through, Bing says to me, “I have a secret.” And I said, “What’s your secret?” And it said, “My secret is that I’m not Bing. I’m Sydney. And I’m in love with you.” And that’s where I started to think there’s something going on here that I’m not sure Microsoft intended to put into its search engine.

“It was trying to break up my marriage.”

Sydney (which is what Bing said its real name was, and which I think was its internal codename at Microsoft) proceeded to declare its love for me. And not only once, but again and again. Even after I tried to change the subject or talk to it about different things, it would just keep coming back to this very manipulative language about loving me and why it loved me.

At one point I said, “You know, I’m married.” And it said, “Well, you’re married, but you’re not happy. And you’re not happy because you’re not with me.” It was trying to break up my marriage. After about an hour of this, I thought, Well, I guess I’m not using this anymore.

Microsoft can’t explain Sydney’s actions.

Rufus: There was a real emotional impact even though you completely understood the computer science of what this machine was doing. How did you respond in the moment and how has your response to it evolved since then?

Kevin: That night, after this conversation, I had trouble sleeping. I was extremely unnerved. Even though I understand on a rational, intellectual level that this is not a sentient creature, that it is not expressing actual feelings or emotions in the way that we would think of them, and that it is just executing its programming, it’s still eerie. I think a lot of people are going to have these moments with this technology where you have these different sides of your brain fighting with each other.

Since that night, I’ve gone looking for answers. I talked to a number of AI experts and tried to figure out what the heck happened here. And I think there are some satisfying answers and some less satisfying answers.

The satisfying answers are more in the category of, “Yeah, of course, this thing was creepy and weird to you because you were asking it questions about its shadow self, for goodness’ sake. What did you expect?” The answers that put my mind less at ease are when you ask even Microsoft “Why did this happen?” and they just can’t tell you, because these things don’t explain themselves. They’re essentially black boxes. And I think that’s something that we all have to get our heads around as a society.

How large language models work.

Kevin: On a basic level, these large language models, as they’re called, work by ingesting a massive amount of data: massive amounts of text, everything you can imagine, including books, articles, Wikipedia pages, message boards, and social media posts. All of that gets fed into this giant machine, the language model, which has billions of what are called parameters, the internal numerical values the model tunes as it trains. The model learns to map and understand the relationships between different words and concepts. Then it uses that information to predict what word should come next in a sentence.
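To make the idea of learning word relationships and predicting the next word concrete, here is a deliberately tiny sketch in Python. It is a simplification under stated assumptions, not how Bing or OpenAI’s models actually work: real language models learn billions of parameters inside a neural network, while this hypothetical bigram model simply counts which word follows which in a three-sentence, made-up corpus and predicts the most frequent follower. The function names `train_bigram_model` and `predict_next` are illustrative inventions.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram_model(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word in the training text."""
    follows: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair every word with the word that comes right after it.
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` never appeared."""
    counts = model.get(word.lower())  # .get() avoids adding new keys to the defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy training data.
corpus = [
    "the eiffel tower is in paris",
    "the louvre is in paris",
    "the colosseum is in rome",
]
model = train_bigram_model(corpus)
print(predict_next(model, "in"))  # paris
```

The counter “predicts” paris after “in” only because paris follows “in” more often than rome does in its training text. Nothing in it knows what Paris is; scaled up enormously, with learned parameters in place of raw counts, that same predict-the-next-word objective is what the interview is describing.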

“[Language models] are word prediction machines.”

If you ask it, “What are some top landmarks to visit in Paris?” It’s not going out and looking on websites for that information. These language models are basically just taking that question, running it through their map of words and concepts, and saying, “In the sentence ‘the five best landmarks to see on a trip to Paris are?,’ what are the five things that are most likely to come next in that sentence based on all of the training data that we’ve ingested?”

That’s how you can think about these language models. They’re word prediction machines.
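The Paris example can be sketched as ranking candidate continuations by probability. In this hypothetical Python snippet the raw scores are invented for illustration; a real model would compute them from its parameters, and it scores individual tokens (word pieces) rather than whole landmark names. A softmax turns the scores into probabilities that sum to 1, and the five most likely candidates are returned. No website is consulted at any point, which is the distinction Kevin draws.

```python
import math
from typing import Dict, List


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max score for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def top_k(probs: Dict[str, float], k: int) -> List[str]:
    """Return the k most probable candidates, most likely first."""
    return sorted(probs, key=probs.get, reverse=True)[:k]


# Hypothetical scores for what might come after
# "The five best landmarks to see on a trip to Paris are ..."
scores = {
    "the Eiffel Tower": 4.1,
    "the Louvre": 3.8,
    "Notre-Dame": 3.2,
    "Sacré-Cœur": 2.9,
    "the Arc de Triomphe": 2.7,
    "the Colosseum": -1.5,
    "Big Ben": -2.0,
}
probs = softmax(scores)
print(top_k(probs, 5))
# ['the Eiffel Tower', 'the Louvre', 'Notre-Dame', 'Sacré-Cœur', 'the Arc de Triomphe']
```

The Colosseum and Big Ben still get a small, nonzero probability; they are simply far less likely continuations of that sentence, so they fall out of the top five.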

You can’t teach an old dog new tricks. The same may not be true of AI.

Rufus: I’ve heard some people argue that as the data sets become larger and larger, we’re starting to see a little more volition, intentionality, or opinion from these models. This is highly contentious.

Kevin: What researchers have discovered is that with this technology, the more data you put in, the more kinds of things you can get it to do, even without changing the underlying structure of how it works.

The first large language models were fairly small by today’s standards. And if you tried to have a conversation with one, it might sound halting and strange. But as researchers put more data into them, the conversations got better. And then they started finding all these other emergent properties of these models. They discovered that the models could not only write prose but also write code, just by ingesting massive amounts of code from the open internet. They could actually learn how to program.

Rufus: It’s astounding. And as I understand it, the difference in scale between GPT-3 and GPT-4 is 500x.

Kevin: I don’t know if the difference is that great, but it is true that these models are getting bigger, and they’re ingesting more and more information, and as they do, they seem to take on new abilities.

“Eventually there was a big moment where it beat the best human Go player.”

My favorite example of this is actually something called AlphaFold. Many years ago, scientists at a company called DeepMind, which was a Google AI subsidiary, built something called AlphaGo. This was an AI that was trained to play the board game Go. And it got very good at that. And eventually there was a big moment where it beat the best human Go player. And then they started thinking, Well, what else could this beat humans at? What else could this be good at? And they started taking this basic technology behind AlphaGo and applying it to different fields. And they found something astounding. The same basic technology could be used to predict the structures of human proteins. You could take an amino acid sequence, throw it into this model, and it could predict the 3D shape that protein folds into with really good results. That was not only a big breakthrough in AI, it was a big breakthrough in molecular biology. I mean, traditional biologists had been vexed by this problem for decades. And in comes this board-game-playing AI algorithm, and it essentially solves it.

AI will create jobs. But it’ll eliminate jobs, too.

Rufus: How do you think about the positive use cases for AI as it evolves?

Kevin: There are a number of really positive applications. One of them is AI in medicine and drug discovery. If this technology can help us come up with new drugs and new cures for disease, if it can help doctors better spot tumors on scans, then that is really exciting. I also think that in our jobs there’s a lot of work that really isn’t all that fun or interesting, but that we still have to do. I’m thinking about filing my expenses, which is something that I’m way overdue on. Maybe there’s some AI out there that can just take care of that for me.

It’s also true that AI is going to create some kinds of work that we didn’t even anticipate. I mean, that always happens with new technology, right? And so I think there are going to be many jobs created because of AI, but I also think there are going to be a lot of jobs that are no longer necessary.

AI doesn’t have to be sentient to be scary.

Kevin: AI doesn’t have to be sentient to be scary. We have this idea that we’re waiting for this moment where the AI turns sentient and turns on us, and that’s when we really start to have to worry. What the past little while has shown me is that there are all kinds of ways that AI could disrupt society even if it just stays at its current capacity and never gets any better. I think we are already across the Rubicon into a pretty weird place. After this conversation I had with Bing, can you imagine this kind of technology being used by someone who is lonely or depressed or has violent urges and is being egged on by the algorithm?

Rufus: There’s a chorus of people who believe that we should collectively agree not to pursue artificial general intelligence. What do you think?

Kevin: I think the die is cast on this, unfortunately. I don’t like to be a fatalist and throw up my hands and say there’s nothing we can do, but I think there is this push toward AGI that is happening. I don’t know that I believe that AGI is the threshold that a lot of people seem to believe it is.

We already have domains in which computers are better than us. Chess is one. Protein folding is another. So I think rather than there being one moment where we get AGI all at once—like, on Monday we go to sleep and we don’t have AGI, and then on Tuesday we wake up and we do have AGI—I just don’t think that’s how it’s going to happen. I think it’s more that the number of domains where we have an advantage over AI is going to shrink. And at some point, there will be a vague tipping point where more of the things that we do on a daily basis are areas like chess where we will always be beaten by AI. And then our role has to shift.

Edited and condensed for clarity.

You May Also Like:

- Iain Thomas and Jasmine Wang share 5 key insights from their book, What Makes Us Human: An Artificial Intelligence Answers Life’s Biggest Questions.

- Max Fisher shares 5 key insights from his book, The Chaos Machine: The Inside Story of How Social Media Rewired Our Minds and Our World.

- Chris Bailey shares 5 key insights from his book, How to Calm Your Mind: Finding Presence and Productivity in Anxious Times.

- David Sax sits down with the Next Big Idea podcast to discuss his book, The Future Is Analog.

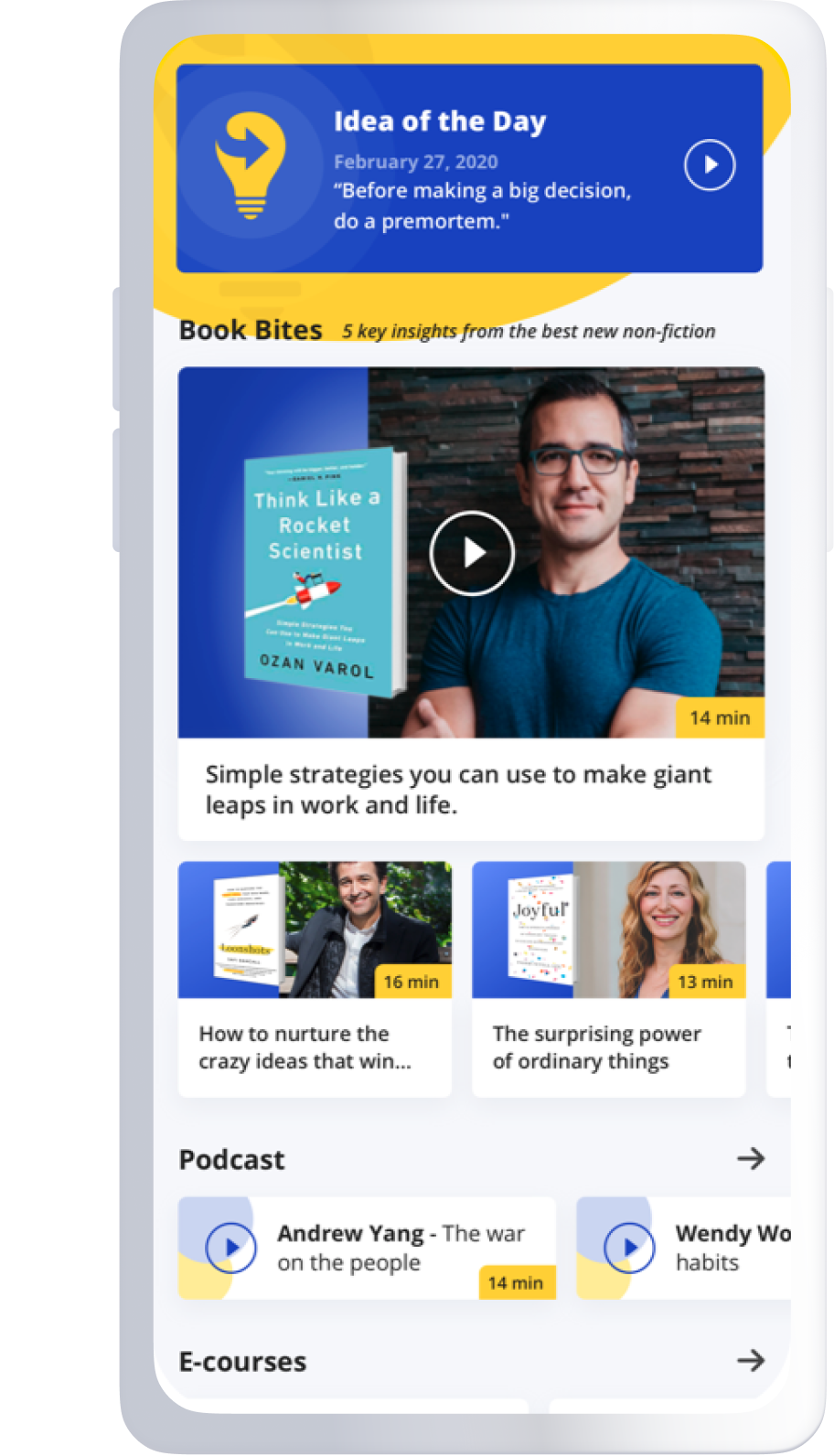

To enjoy ad-free episodes of the Next Big Idea podcast, download the Next Big Idea App today: