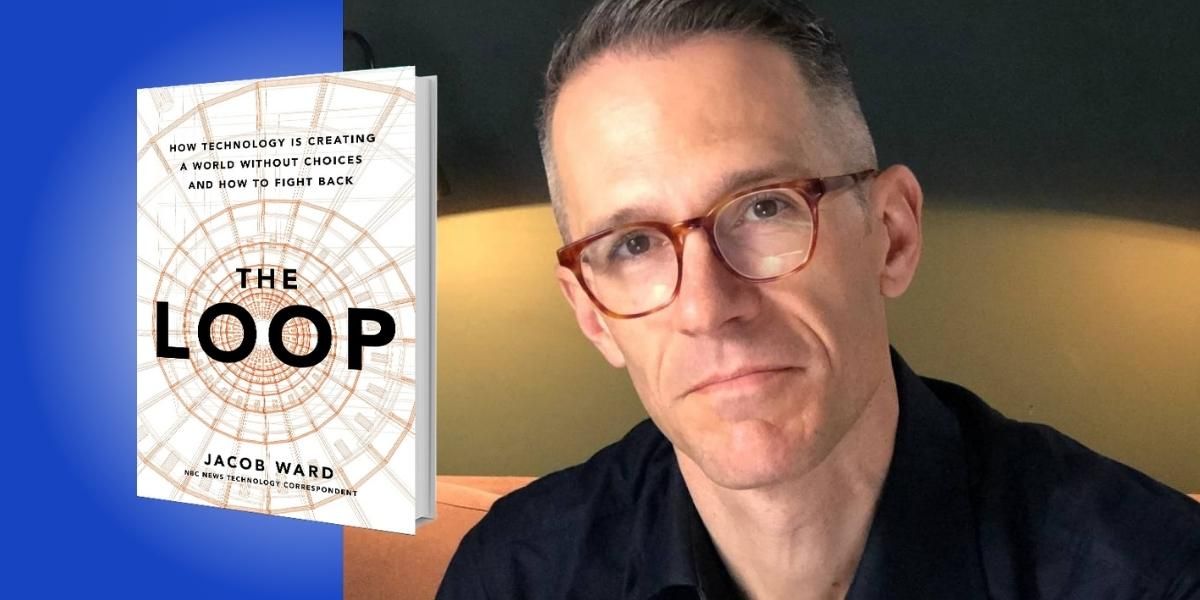

Jacob Ward is technology correspondent for NBC News, and previously worked as a science and technology correspondent for CNN, Al Jazeera, and PBS. The former editor-in-chief of Popular Science magazine, Ward writes for The New Yorker, Wired, and Men’s Health.

Below, Jacob shares 5 key insights from his new book, The Loop: How Technology Is Creating a World Without Choices and How to Fight Back. Listen to the audio version—read by Jacob himself—in the Next Big Idea App.

1. Human behavior is pattern-based.

We make decisions using systems that rest on ancient evolutionary gifts. The last 50 years of behavioral science have established that, in a way, the brain can be thought of as two brains: a fast-thinking brain and a slow-thinking brain. This idea was popularized in Daniel Kahneman’s book, Thinking, Fast and Slow.

The fast-thinking brain is the one we’ve had for around 30 million years. We have aspects of it in common with other primates, including monkeys, and it is the system that allows us to make rapid decisions without having to talk it through or blow any calories. So, imagine you and I are sitting together and suddenly a big snake slithers into the room between us. Now, we wouldn’t sit there and talk it out, right? We wouldn’t sit and ask, “What kind of snake is that?” We would leap to our feet and get out of there. Our instinctive decision-making system gets us away from danger. This system allows us to make all kinds of decisions, like what food we’re going to eat, what people we’re going to trust, and which dangers might slide into our lives.

The other brain is the slow-thinking brain, which is a much more recent development in evolutionary terms. It’s probably only about 70,000 to 100,000 years old. This is the system that we use to make creative, cautious, rational decisions, and it’s super glitchy, according to the people who study it. It’s the system that we use to invent things like law and art. It’s also central to what we think of as being human. Researchers have discovered that we use our fast-thinking, instinctive brain most of the time, but we think we’re using our creative, cautious, rational mind most of the time. Pattern-based behavior turns out to have everything to do with using our fast-thinking brain. When you put that brain under pressure and starve it of some essential information, it makes all kinds of decisions in ways that we can predict using formulas. Those predictable patterns in human behavior make us ripe for exploitation by pattern-based systems like AI.

2. Companies are using AI to predict patterns you don’t even know you have.

You can experience this in many ways in your day-to-day life. If you let Spotify recommend a song to you, it will probably do a pretty good job. If you watch a YouTube video, the next one the platform recommends will probably be one that you would be interested in watching. There’s almost no category of technology-driven company that isn’t trying to predict what you will do next, and they’re increasingly using AI for that purpose. Many of the top technology companies employ people who do the sort of behavioral research that led us to understand that we are pattern-based. Those researchers are helping to refine these systems so that they predict our behavior more accurately.

This deployment by companies is taking forms we never saw coming. It’s not just being used to recommend a song or a video you might like; it’s being used to recommend who gets a job, who gets a loan, or who gets bail. In theory, all of these things can be done by a pattern-recognition algorithm. The problem is that those algorithms are not as smart as we think they are, and we basically get addicted to using them. Through over-reliance, we will reach a point where we no longer know how to make those decisions without that kind of system involved, and those systems are going to shape our behavior in invisible ways that we will irresistibly follow.

3. AI is a lot simpler than you think.

AI is really just an ability to take an unstructured set of variables, a big mess of data that a human being could never take the time to look through, and find patterns. It will discern that if X and Y conditions are met, then Z outcome is probable. Take, for example, a system that distinguishes between dogs and cows. Let’s say you’ve got a room full of dogs and cows, and you ask the AI system to distinguish between them. It would find whatever variables it could that best predict the difference. What’s missing from so much of what we talk about when we talk about AI is understanding how simple its process is.

An AI might look at dogs and cows and find the easiest variables to distinguish between those two categories, such as size or basic behaviors, and so it renders a verdict. It says this is a cow, and this is a dog. The problem is we humans look at that and think, wow, this thing is amazing. It’s a dog and cow expert! But the truth is, it doesn’t actually know anything about dogs and cows. It only knows the bare minimum information it needs for picking patterns that allow it to tell the difference.
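To make the dog-and-cow point concrete, here is a minimal sketch in Python. It is not any real company's system. The animals, their measurements, and the names `ANIMALS`, `fit_stump`, and `predict` are all made up for illustration. The "AI" here is about the simplest pattern-finder there is: it searches for the one measurement and cutoff that best separates the two labels, and nothing more.

```python
# Hypothetical toy data: (weight_kg, barks_per_hour) -> label.
# Every number here is invented for illustration.
ANIMALS = [
    ((8.0, 12), "dog"),
    ((30.0, 5), "dog"),
    ((45.0, 9), "dog"),
    ((520.0, 0), "cow"),
    ((610.0, 0), "cow"),
    ((700.0, 0), "cow"),
]
FEATURES = ["weight_kg", "barks_per_hour"]


def other(label):
    """Return the opposite label in this two-class toy problem."""
    return "cow" if label == "dog" else "dog"


def fit_stump(rows):
    """Find the single feature and cutoff that best separate the labels.

    Returns (feature_index, threshold, label_if_above, accuracy).
    """
    best = None
    for f in range(len(rows[0][0])):
        values = sorted({x[f] for x, _ in rows})
        # Candidate cutoffs: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            for above in ("dog", "cow"):
                correct = sum(
                    (above if x[f] > threshold else other(above)) == y
                    for x, y in rows
                )
                accuracy = correct / len(rows)
                # Keep the first rule found with the highest accuracy.
                if best is None or accuracy > best[3]:
                    best = (f, threshold, above, accuracy)
    return best


def predict(stump, x):
    """Apply the learned one-line rule to a new animal."""
    f, threshold, above, _ = stump
    return above if x[f] > threshold else other(above)


stump = fit_stump(ANIMALS)
print("Learned rule: if", FEATURES[stump[0]], ">", stump[1], "then", stump[2])

# A newborn calf (hypothetical 35 kg, no barking) falls below the weight cutoff.
print("Newborn calf is classified as:", predict(stump, (35.0, 0)))
```

On this toy data the learned rule is a weight cutoff, and it scores perfectly on the six animals it has seen. Hand it a 35 kg newborn calf and it calls it a dog, because weight is the only thing it ever learned to look at.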

This is true no matter what you throw at an AI system. If you have an AI system trying to determine which person in a photograph is most likely to be a security threat, it’s going to use the same comparatively stupid way of drawing a distinction that it might use to draw a distinction between dogs and cows. Yet when you ask the makers of an AI system how their algorithms know the difference between these two things, the creators may not know. They are not legally required to know, and it’s not a technical requirement for the system. AI makers don’t really know how their AI works most of the time.

In many surveys, it’s been shown that leaders of companies that rely on AI systems to make important decisions don’t care how those systems work, because they work well enough. Combine all that with the fact that we humans are prone to believing the verdicts of systems we don’t understand, and you wind up in a dangerous situation. We take, on faith, the verdict of relatively simple systems that no one is able to actually look inside.

4. Good AI is perfectly possible, but money tends to ruin it.

When I speak to people who want to deploy AI for socially beneficial reasons, they complain that their clients—the museums, governments, and social service agencies that could benefit from AI—don’t have the money or the sophistication to deploy it. For instance, one researcher was telling me that if you gave him an AI system and access to all of the birth certificates issued in the United States each year, he could specifically identify which apartments need to be stripped and repainted to avoid lead poisoning of millions of children a year.

It would be an incredibly useful purpose to put AI to. The trouble is, nobody’s making money taking lead paint out of buildings. Instead, people are deploying AI to do things like figure out which movie script is going to be the most successful with an audience, or mediate between parents who are fighting over child custody. Those are both real uses of AI. There is an experimental system coming out that guides the conversational style between customer service agents and their clients, as well as guiding conversation style between colleagues. AI is being deployed predominantly for financial gain, but likely its greatest use could be for nonprofits.

When we think about your fast-thinking brain and your slow-thinking brain, consider that nobody makes money selling to your slow-thinking, creative, cautious, rational brain. They want to sell to your ancient, instinctive, snake-detection system. They want to trigger your fast-thinking brain to do something unconsciously and instinctively. That is a huge part of how people are making money, and especially making money off of AI.

5. We need to learn our patterns and preserve our inefficiencies.

It is always going to be the case that AI can make us money and save us time by being deployed on a problem. There is no guarantee that it would solve the problem in an ethically viable way, but it could make a problem easier to deal with, such as by requiring fewer employees. All sorts of benefits can accrue when you sic AI on a problem.

But as one federal judge put it to me, you could make the act of entering a guilty or not guilty plea far easier than it is now by deploying AI. Right now, you have to go to court, enter a plea in person, and jump through all sorts of hoops. Even though you could make that far more efficient, such as a right-or-left swipe on a phone, you don’t want to do that. The system is designed as it is in order to trigger your slow-thinking brain. You are made to stop and think about it.

There are all sorts of examples of ways we could make modern life more efficient. We could make it more efficient to figure out who to arrest, who gets a job, and who gets a home loan. But should we? Maybe instead we should encode some inefficiencies, by which I mean fundamental human values, that make the difference between our ancient, instinctive selves and our modern selves who are trying to create law and rationality and art and justice. In order to be the best version of ourselves, we may need to push back against technology that is trying to make money off the people we never intended to be.
