Andrew Smith is a broadcaster and screenwriter with a deep background in journalism. He has worked as a critic and feature writer for the Sunday Times, the Guardian, the Observer, and The Face, and has penned documentaries for the BBC.

What’s the big idea?

A journalist seeks to understand the purpose and cultural implications of coding by investigating computer history, exploring the tech industry, and learning to code himself.

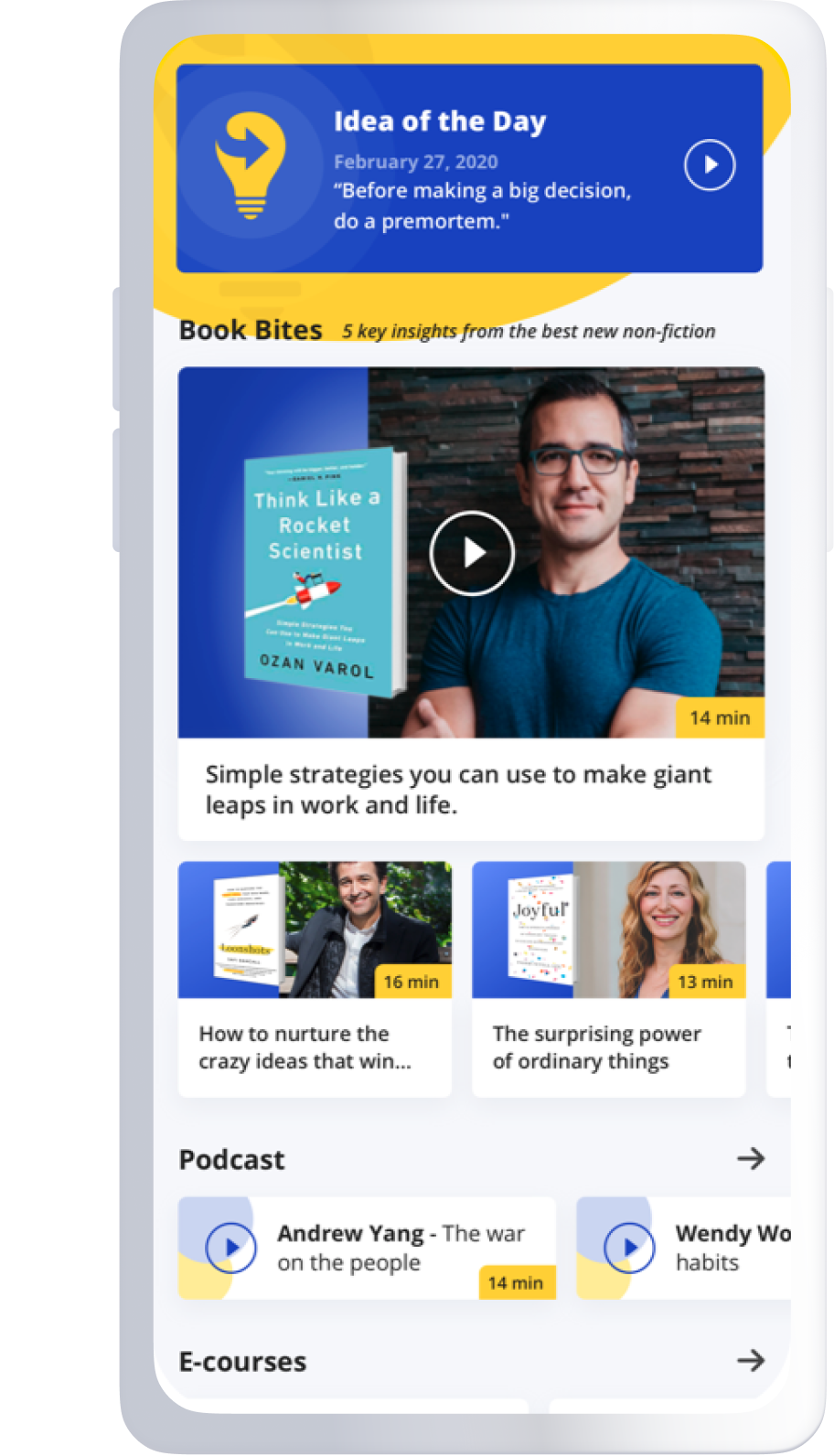

Below, Andrew shares five key insights from his new book, Devil in the Stack: A Code Odyssey. Listen to the audio version—read by Andrew himself—in the Next Big Idea App.

Software engineers will tell you the purpose of their art is to make the world better. One day in 2017, I began to suspect that, overall, this wasn’t happening: that as algorithmic computer code flooded into the world around me, that world was growing nastier, more homogenized, binary, and economically feudal in approximate proportion.

Were the two things connected? I quickly saw two possible conduits for some form of causal relationship. Pop culture had long portrayed programmers and coders as oddballs and misfits reconfiguring society to suit themselves. Fans of the streaming show Slow Horses know what I mean here. Was there any truth to this? Equally, the weirdness and nihilism of code’s hyper-capitalist Silicon Valley incubator was easily imagined as a kind of virus reproducing in whatever it touched.

There was also a third and more sobering possibility. Could there be something about the way we compute, something hidden in the code itself that is innately at odds with how humans have evolved? I knew the only way to find out would be to dive into the machine, into what I came to think of as the microcosmos, by learning to code myself. Over a five-year period that was by turns terrifying and thrilling, and during which I often feared I didn’t have the right kind of mind for code, I did.

1. The way we compute is deeply eccentric.

The world’s first computer went to work for codebreakers at the British Government Code and Cypher School in Bletchley Park during World War II. The machine, affectionately known as “Colossus,” was designed and built by a brilliant Post Office engineer named Tommy Flowers, to a specification that the famous computer scientist Alan Turing had floated in the 1930s. The machine did as much as anything or anyone to win the war for the Allies.

Colossus and its powerful U.S. successor, the ENIAC machine, were built hurriedly with materials and techniques available to engineers in the 1940s. All that followed built on their legacy, each essentially consisting of a calculator attached to a clock, with each tick of the clock triggering one calculation cycle. The objects of these calculations were streams of binary numbers generated by vacuum tubes functioning as switches, with “off” representing a zero and “on” a one. In the general-purpose computers we use today, these numbers may be used to represent things in the human realm. For instance, the number 60 might symbolize the note middle C in a musical composition. But because microprocessors can only process the world in precise binary terms, the system is brittle, convoluted, inefficient, and tends to de-emphasize complexity. As Winston Churchill noted, “Nature never draws a line without smudging it,” and our present computers do not cope well with smudge.
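To make the middle C example concrete, here is a minimal sketch of how a single number can stand in for a musical note once it is stored as on/off switches. It assumes the MIDI convention, in which note number 60 is middle C (often written C4); the text does not name that convention, and the function names `to_bits` and `midi_note_name` are invented purely for illustration.

```python
# Assumption: MIDI-style numbering, where 60 is middle C (C4).
# The helper names below are hypothetical, chosen for this sketch.

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F",
              "F#", "G", "G#", "A", "A#", "B"]


def to_bits(value: int, width: int = 8) -> str:
    """Return the number as a fixed-width string of 0s and 1s,
    i.e. the pattern of 'off' and 'on' switches that stores it."""
    return format(value, f"0{width}b")


def midi_note_name(number: int) -> str:
    """Translate a note number into a human-readable note name."""
    octave = number // 12 - 1          # 60 // 12 - 1 gives octave 4
    return f"{NOTE_NAMES[number % 12]}{octave}"


if __name__ == "__main__":
    print(to_bits(60))          # the switch pattern for the number 60
    print(midi_note_name(60))   # the note that pattern is taken to mean
```

The machine only ever sees the switch pattern; that it "means" middle C is an agreement among the humans and programs that use it.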

This is not to diminish computing’s achievements. As I burrowed deeper into the machine, I came to regard the digital edifice now surrounding us as perhaps humanity’s greatest collective act of creativity to date. Nonetheless, nature is a far more subtle and resilient processor of information than anything humans are likely to develop—and some significant digital pioneers recognized as much. Notably, the Hungarian-American genius John von Neumann (after whom our classical computing architecture is erroneously named) was already thinking beyond the Turing paradigm by the time of his regrettably premature death and appears to have assumed subtler ways to compute would be found as new materials and concepts became available. He would be astonished to find that, so far, they haven’t.

2. Code’s striking diversity problem may be a neurological problem.

By most reasonable estimates, somewhere between five and ten percent of professional coders are female. This affects both the software that gets written and how it gets written. But in the course of submitting to two fMRI brain scans with teams of neuroscientists trying to understand how the brain treats this newest form of input, I came to suspect that coders are being actively—if unconsciously—selected for left hemispheric cerebral bias.

Simplified greatly, the left hemisphere processes tasks requiring close attention to detail, whereas the right is more broadly focused. Taking the example of natural language, syntax is most ably processed in the left hemisphere, which thrives on tight combinatorial logic. In contrast, higher functions like metaphor and humor are recognized and processed solely in the right, which tends to understand the world in terms of relationships rather than abstraction. Left hemispheric dominance is statistically far more common in men (which is related to autism also being at least four times more common in men).

In an ideal world, there would be a more or less equal balance between the hemispheres at both the micro (individual) and macro (corporate) levels. Instead, the tech firmament collectively bears all the hallmarks of what the neuroscientist and psychiatrist Iain McGilchrist characterizes as “right hemisphere damage.” By default, the Big Picture may be less easily seen than the short-term advantage.

3. For all of tech’s problems, coders have a huge amount to teach us.

In my fifties and with no technical background, I found code deeply uncomfortable at first; I began to suspect that only a certain kind of “coding mind” could process its binary logic happily. In addition, I worried that the alien, linear mental patterns I was forced to internalize would inhibit my pre-coding mind’s lateral, imaginative motion.

But the opposite was true. To code well requires empathy and imagination as much as logic, and an insufficiency of these human qualities underlies most of the more destructive code we see. In addition, code’s extraordinary open-source development system depends on an almost utopian assumption that people want to help each other and that a rising tide lifts all boats. Cooperation, communication, and concern for others are written into the structure and culture of programming languages like Python and Ruby. I frequently find myself at coding events thinking, “Why can’t the whole world work this way?”

4. We may never see artificial general intelligence.

What we call “AI” is not AI—it is machine learning. From the 1950s onward, computerists have promised that true “AI” is just around the corner, simultaneously overstating their own powers and underestimating biology. The more closely we study rays, cuttlefish, bees, and our own extraordinary brains, the clearer it becomes that our machines are not even close to acquiring comparable abilities.

In truth, Large Language Model “AI” like ChatGPT may be moving us further away from general intelligence, not closer to it. At present, “AI” is best understood as a marketing term. This is why I am less concerned about the arrival of superintelligent machines that subjugate us and more fearful of their ability to simulate human intelligence while being fundamentally fast but stupid, seducing us into meeting them in the middle, dissolving our intelligence into their dumbness on a promise of convenience. It’s easy to suspect this is already happening.

5. We came within a hair’s breadth of a computing revolution in mid-Victorian England.

Everything that happens inside a computer is predicated on a revolutionary system of logic conceived by George Boole, a poor cobbler’s son from Lincolnshire with no formal education.

Boole was born in 1815, within six weeks of Ada Lovelace, a countess and daughter of the Romantic poet Lord Byron, who was herself a brilliant mathematician and close friend of Charles Babbage, the man widely regarded as the father of computing. Lovelace is credited with having seen further into Babbage’s Analytical Engine (which would have been the world’s first programmable computer had it been built) even than its designer.

Between them, this trio held all the knowledge necessary to launch a computer revolution a full century before the one we’re currently straining to accommodate. When Boole met Babbage in the Crystal Palace at the Great Exhibition of London in 1851, the conceptual circuit was completed: at this point, the possibility of a Victorian digital revolution became tangible. Sadly, Ada and Boole both died young, while gender and class stratification kept them out of the English academy, where they might have worked more formally with Babbage. Only time will tell whether this was a good or bad thing for humanity, but it is intriguing to consider.
